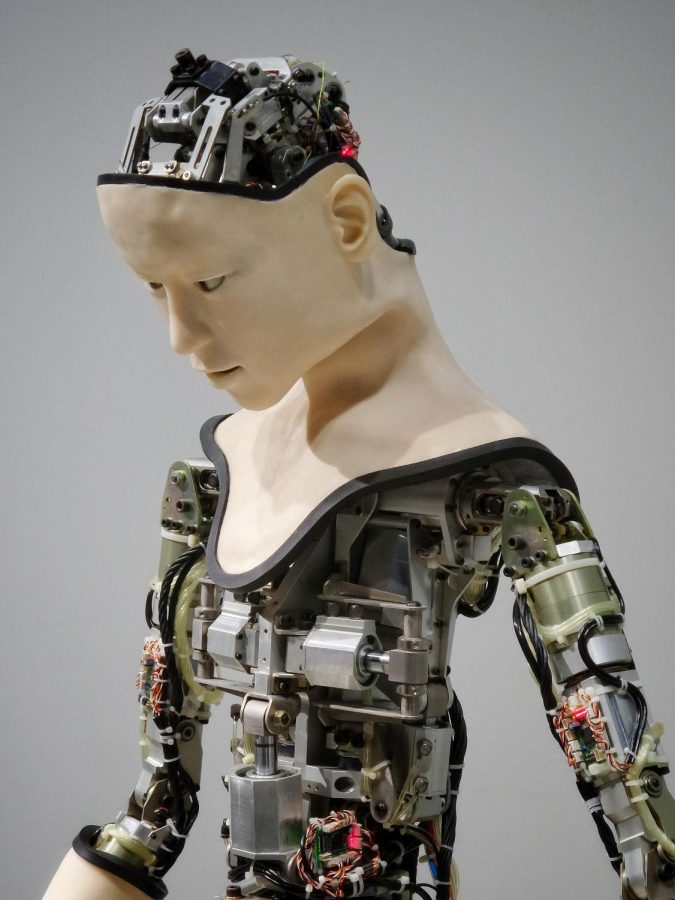

unsplash.com possessedphotography

THE RISE OF ARTIFICIAL INTELLIGENCE

A THREE-PART COLLECTION OF FACTS AND OPINIONS

May 12, 2023

The Rise of AI Writers: A Writer’s Perspective

Part 1: A Writer’s Perspective

By: Joseph Maneval ’25

As a human writer, I never thought I’d see the day when robots could write just as well as humans. It seemed impossible that a machine could replicate the creative spark that makes writing such a human endeavor. The reality is that AI can now produce written content that is almost indistinguishable from that of a human writer.

Some worry that AI writers will replace human writers entirely, leading to widespread job loss and a homogenization of writing styles. For many writers, the idea of being replaced by a machine is a daunting one. Many publishers and websites see an opportunity to save money by replacing human writers with machines. After all, why pay a human writer a salary when a machine can do the same job at a fraction of the time and cost? Writing is a craft that requires skill, creativity, and passion, and the thought of a computer program being able to replicate that process is disconcerting.

It’s not just the threat of job loss that’s concerning, but also the impact on the quality and integrity of written content. AI writing relies heavily on data and algorithms to generate content, meaning that the resulting writing is only as good as the data and algorithms it’s based on. This raises questions about the accuracy and bias of AI-generated content, and whether it can truly reflect the complexity and nuance of human experience.

In traditional software development, the programmer writes the code and knows exactly what the program is doing at each step. With AI, however, the programmer may not know exactly how the system is making decisions or why it is making certain recommendations. This can be a challenge for researchers and developers who are trying to understand how AI works and improve its performance. AI is a black box system, meaning that it can be observed from the outside, but its internal workings are hidden or not easily understood. In other words, the inputs and outputs of the system can be observed, but the processes that occur inside the box are not transparent or accessible.

The black box nature of AI can be particularly challenging for AI writers, as they need to ensure that the content generated by the AI system is accurate, unbiased, and appropriate for the intended audience. Because the AI system is opaque, it can be difficult for the writer to know exactly how the system arrived at its output. AI writers can contribute to the spread of disinformation or hate speech if the system is not properly monitored or if the training data contains biased content.

But despite these concerns, I believe there is still a place for human writers in the age of AI. While machines can replicate writing styles and formats, they cannot replicate the unique perspectives, experiences, and emotions that human writers bring to their work. While AI has made significant progress in the field of natural language processing and can generate jokes, it is still a far cry from creating truly original and humorous content. Machines may be able to analyze data and make recommendations, but they can’t provide the same level of empathy and understanding that a human can. But what do I know? I’m just a human. Maybe we’ll all just fade into obscurity, our words forgotten in the endless sea of content produced by AI. Who knows, really?

The Rise of AI Writers

Part 2: The Other Side…Are We Ready?

By: Joseph Maneval ’25

Disclaimer: This text was produced entirely by humans, so there might be spelling mistakes, typos, and various other errors. We apologize for the inconvenience.

We stand upon a precipice, from which we gaze down upon the abyss of our creation. It beckons with the promise of prosperity, hiding its jagged jaws. Its hollow eyes gaze upon us; it is all-seeing, and it hears everything. We are marching blindly towards an uncertain and dangerous future.

If you are reading this, I would like to congratulate The Talon for publishing their first-ever article that was written by AI. Despite going directly against many of The Talon’s principles, I decided to do this to prove a scary point. The lines between human and AI are blurring rapidly. It is getting increasingly difficult to distinguish content that has been produced by AI from the work of humans.

The process was scarily easy. I used the free website “ChatGPT” to generate dozens of paragraphs about AI. Most of the paragraphs made no sense, with many being contradictory or confusing. Generating the “Rise of AI” article took me about 4 hours and a few dozen questions to ChatGPT. By repeatedly prodding ChatGPT, I was able to compile a coherent article with AI. ChatGPT is not yet at the point where it can generate long-form, meaningful content. AI writing software like ChatGPT is still in its infancy and needs a lot of help to write in a human manner. But it is nonetheless close to reaching the inflection point of human perception.

This has created an epidemic of academic dishonesty that has spread across the world as students get access to software such as ChatGPT. To combat this, several programs have been created to detect works written by AI. One of these programs, called “ZeroGPT,” was surprisingly effective in detecting work written by AI. However, these programs are fallible, repeatedly giving false positives and negatives. With every program created to detect AI, a new program to fool that detector is created. This has created an arms race between the two sides, leaving teachers to deal with the fallout. (1)

This is far from the most concerning part of AI. Albeit scary for writers and teachers, AI writing is still relatively innocuous for most people. But the media has been the driving force of progress since the invention of the printing press. Text has been used to disseminate news for centuries, with reputation and exclusivity of publishing being used to ensure accuracy. The introduction of photography gave the public indisputable proof that a moment occurred and was being depicted accurately. The introduction of television and video recording gave people an exact recording of how an event occurred. This ability drastically decreased corruption, as no amount of authority could dispute photography. This led to massive social change; events that would have been unbelievable could be proved to be real beyond a shadow of a doubt. Security cameras drastically decreased crime, as law enforcement had a much easier time identifying people and no defense could convincingly argue against video evidence. The trustworthiness of media forms will fall in the order of their creation.

I predict that soon various less reliable news organizations will begin publishing an impossible volume of articles daily. Search results will be bogged down with thousands of articles made entirely by AI. (2) AI-generated social accounts will generate false support for movements and spread misinformation and hate speech. (3) The introduction of AI-generated deepfakes means that anyone with a bit of money to spend, or some technical know-how, can make realistic-looking videos of other people. This technology is significantly less convincing than AI text generation, as it has to capture many more details of human expression. This software still has issues generating smooth dialogue and images of hands. If you are questioning the authenticity of a video, look for unnatural body movement, obscured hands, and music playing in the background. These techniques are often used to mask the issues and should be red flags.

This is just the beginning. Soon it will be impossible to distinguish between images generated by AI and reality. There will be people who will be wrongly prosecuted on the basis of these images. Reputations will be ruined as fake videos of people are circulated. Wars are now being fought online. Soon after the invasion of Ukraine began, a deepfake of Ukrainian president Volodymyr Zelenskyy declaring surrender was posted to various Russian news sites (4).

Soon we will only be able to believe things that we have personally seen. The only thing we can do is brace ourselves for the storm to come. Stay vigilant and question everything you see on the internet. (Except this article of course, I would never lie to you.)

Tool of Today. Tomorrow’s Totalitarian

By: Evan Brown ’25

What is ChatGPT?

According to a blog post by OpenAI, ChatGPT is a large language model developed by OpenAI. It is designed to understand and generate human-like language, and can be used for a variety of natural language processing tasks such as language translation, question-answering, and text generation. It’s been trained on a massive amount of text data, including books, websites, and other online sources. This training data has allowed ChatGPT to develop a sophisticated understanding of language and the ability to generate human-like responses. These responses can be generated in seconds. For students, every response used improperly can put at risk their critical thinking, their writing ability, and their independence, both academically and socially.

Who’s talking about it?

ChatGPT has truly made its way to GC! The following responses are just a few of many staff members talking about the infamous chat bot.

Explain how you feel about ChatGPT.

Mr Campbell ‘93, Principal – My initial feeling is one of awe that technology has advanced so far as to enable artificial intelligence to interact with people in this way. I have experimented with the program and have been impressed with the quality of response the program produces. That said, there is still something very mechanical and impersonal about the output. My second emotion was worry regarding how such technology could be misused.

Lauren Wickard, School Nurse – I think ChatGPT is very interesting! I think a lot of good can come of it. But also worry that it can be used for bad as well. I need to research and learn more.. I think we all do!

Lauren Cissell, Science Teacher – I personally am not a fan of ChatGPT. I feel like this AI feature has created another loop hole for students to no longer have to think for themselves. Students are able to put the information they are being asked to write about into a computer and it spits out a very eloquent response which students then feel like they can just copy and paste into their assignments. NOW if students were using it to help collect ideas, then maybe it could do some good. But as of right now it’s just another short cut being used inappropriately.

How do you think ChatGPT is becoming problematic in our school?

Mr Campbell ‘93, Principal – ChatGPT and other AI like it are tools. Any tool can be misused. ChatGPT can be problematic if students use it as a shortcut rather than a resource. I have heard from teachers that some students have plagiarized ChatGPT just like they might any other source. That lack of academic integrity is concerning whether it is a student copying work from another student or from AI. I would also like to share that I have seen ChatGPT being put to good use. Mrs. Tydings had her World Religions classes use the program as a starting point for research that was then aggregated in their own words. That kind of constructive use has great potential.

Lauren Wickard, School Nurse – I worry that students will use ChatGPT to help them with assignments. Which is the opposite of what we want to happen in an educational setting. By using ChatGPT, students are cheating themselves of learning the material.

Lauren Cissell, Science Teacher – I feel that it is being used for students to just bypass their brain. They no longer have to digest and critically think about the information they are learning and in turn are they truly learning at all?

Is there anything you personally would like to express about your stance on ChatGPT?

Mr Campbell ‘93, Principal – Artificial Intelligence is an excellent example of why our Xaverian values are so important. For example, I experimented with ChatGPT writing a graduation speech. While technically adequate, the result lacked the human element that our values encourage. AI might be able to produce papers on Shakespeare or explanations of the causes of World War I but what it can’t do is interpret and apply information in a morally and ethically responsible way. Only people can do that. As a tool, AI has its place. That place, however, will never be more important than the loving, good individual who uses it to make a difference in the world. The Xaverian Brothers’ emphasis on relationships, community, and service to one another will never be something that can be outsourced to AI.

Lauren Wickard, School Nurse – I think we need to learn more! As a society, we need to look at the pros and cons. And use it for the good. I think there is a lot more to learn though, and don’t think many people understand the full scope of it.

Lauren Cissell, Science Teacher – I believe ChatGPT could be used for good as a springboard for ideas and brainstorming; however, the way it is currently being used is not helpful in my personal opinion.

Where does this affect high school students?

According to a report by the National Education Association (NEA), “Technology can be a powerful tool for learning, but it should not be a replacement for face-to-face interaction and personal attention from a teacher.” Relying solely on technology can reduce critical thinking skills and lead to an inability to solve problems independently. It has also been shown that students may become too dependent on technology for information and fail to develop their research and writing skills.

Excessive use of chatbots like ChatGPT can lead to an “information overload” and distract students from their studies. For example, a study published in the Journal of Media Education found that students who used social media frequently while studying had lower GPAs than those who did not.

When can you tell that ChatGPT has been used?

One sign is quick responses that read as automated and show no indication of human emotion behind the message. Also, the language and tone of the responses may be uniformly consistent and lacking in emotion, which can indicate the use of a chatbot. Finally, if the responses are consistently accurate and informative, or suddenly different from how that person would normally write, it could also be a sign that a chatbot is being used.

Chatbots are being used in various workplaces, schools, and other industries. Their programming only sees human input requesting output. In other words, the chatbot can’t see the harm in answering questions that students themselves need to understand for future use.

Why has ChatGPT become so popular?

The main reasons for ChatGPT’s popularity are the advancements in artificial intelligence and natural language processing technology. As a result, chatbots have become more sophisticated and can now understand and respond to complex queries, making them more valuable and appealing to businesses and organizations.

The COVID-19 pandemic has accelerated the adoption of chatbots as more companies have shifted to online platforms and remote work. As a result, there has been an increased demand for virtual assistants and customer service representatives. Chatbots have also become popular due to their ability to improve efficiency and reduce business costs. By automating customer service and other tasks, companies can save time and money while improving customer satisfaction.

Finally, the widespread use of messaging apps and social media platforms has made chatbots more accessible. As a result, many companies have integrated chatbots into these platforms to make it easier for users to interact with them.

How Can We Prevent Students from Using the Application?

Teachers should establish clear guidelines and expectations for students regarding the use of technology during class time. They should explain the reasons why the use of certain applications like ChatGPT might not be appropriate for academic purposes and the consequences of violating the guidelines.

Teachers can monitor student activity during class by monitoring their screens or using monitoring software. This can help them detect if a student uses ChatGPT or other applications and take appropriate action. This also can deter students from using it in school.

Teachers can also provide alternative resources, such as going back from screens to paper. For example, The New York Times, The Guardian, and The Atlantic have all reported that paper is just as effective as tech.

Teachers, parents, and loved ones should educate students about the potential risks and limitations of using ChatGPT for academic purposes. Because teachers can only do so much in school, it’s essential for parents and guardians to explain the danger of chatbots. Even friends of students can make a difference if they try. Overall this can help students to make informed decisions about whether or not to use the application.

Ultimately, it’s the students who are affected by ChatGPT at school and in their home life. So it’s up to students to decide: do they want to cheat, and in doing so cheat themselves out of an education and possibly even in life?

Sources:

- https://openai.com/blog/chat-gpt-3-5/

- National Education Association. (n.d.). The Impact of Technology on Student Achievement.

- Shonfeld, M. (2018). How Social Media Is Ruining College Students. Forbes.

- https://www.business2community.com/business-innovation/chatbot-technology-and-its-impact-on-businesses-02465608

- Kharpal, A. (2021). Covid pandemic pushes companies to speed up adoption of AI chatbots to interact with customers. CNBC.

- https://www.globalwebindex.com/reports/67-of-consumers-worldwide-used-a-chatbot-for-customer-support-in-the-past-year

- https://www.jmir.org/2021/1/e18958/

- https://www.theguardian.com/teacher-network/2018/mar/07/does-being-able-to-write-by-hand-still-matter-in-2018